Investigating az-cli performance on the hosted Azure Pipelines and GitHub Runners

I've been building a few more workflows and pipelines over the past few days and have been experimenting with the az-cli. Along the way I've been running into all kinds of performance issues.

Azure CLI is a nifty tool for talking to Azure as well as Azure DevOps, and there's an AzureCLI@2 task in Azure DevOps that preconfigures your Azure subscription and all.

While testing I got increasingly frustrated by how slow az is on GitHub Actions and Azure Pipelines hosted runners. But only on Windows. So, I've been digging into why.

Over the past week I've found quite a few issues, filed a few of them and submitted a couple of pull requests. I'll walk you through my findings; they may help improve the performance of your workflows too!

Update: A number of pull-requests are making their way to the public runners.

✅ actions/runner-images/8410 - Warms up az devops, installs Python's keyring package and runs az devops warmup on Windows.

✅ actions/runner-images/8410 - Redirects all temporary/cache files to a central folder, runs modules during warmup on Windows.

🔁 actions/runner-images/8441 - Redirects all temporary files to a central folder, installs Python's keyring package and runs modules during warmup on Ubuntu.

🔁 azure/azure-cli/27545 - Fixes case-sensitive check against the command index and prevents unwanted index invalidation.

✅ actions/runner-images/8388 - Disables Windows Storage Service.

✅ actions/runner-images/8431 - Disables Windows Update & Windows Update Medic services

🔁 azure-pipelines-tasks/19209 - AzureCLI@2 task ignores existing optimizations on hosted agent

With these changes, first-call performance on Windows is now under ~15s instead of ~60s or more.

Case matters

It turns out that the casing of your commands matters when it comes to az. You probably wouldn't expect it, but az version takes about 12 seconds to run on the hosted runner, including the overhead of starting a fresh PowerShell session.

But az Version takes about one minute. Why? Well, it turns out that az caches all the commands that are available from its many plugins in a command index, and that it looks up the command by key to see if it recognises what you've passed in. That lookup is case-sensitive, so a differently cased command misses the index, which then gets invalidated and rebuilt.

Issue filed:

For now, make sure you call az devops and not az DevOps.

Darn you autocorrect for correcting devops to DevOps all the time!
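If you want to see the effect on your own agent, a step along these lines could time both spellings. It's a minimal sketch rather than part of the original investigation: it assumes a Windows agent with az installed, and the warm-up call is an assumption added here so that the first-run index rebuild doesn't distort the comparison. Once the fix in azure/azure-cli/27545 ships, the two timings should end up close together.

```yaml
steps:
- pwsh: |
    # Warm-up call (assumption): make sure the command index exists before timing.
    az version | Out-Null

    # Lowercase command: found in the cached command index.
    $lower = Measure-Command { az version | Out-Null }

    # Mixed-case command: misses the case-sensitive lookup.
    $mixed = Measure-Command { az Version | Out-Null }

    Write-Host ("az version: {0:N1}s" -f $lower.TotalSeconds)
    Write-Host ("az Version: {0:N1}s" -f $mixed.TotalSeconds)
  displayName: "Compare az command casing"
```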

az devops login vs AZURE_DEVOPS_EXT_PAT

To authenticate to Azure DevOps, you have a few options to choose from. You can use AzureCLI@2 with an Azure service connection, add az devops login to your script, or pass a token through the AZURE_DEVOPS_EXT_PAT environment variable.

Configuring your Azure Service Connection is the slowest of the bunch, followed by az devops login, whereas the environment variable should be the fastest.
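For reference, passing the token through the environment could look like the step below. This is a sketch under assumptions: it uses the job's built-in $(System.AccessToken) as the token, which isn't something the measurements above depend on, and a personal access token stored in a secret variable would be mapped the same way.

```yaml
steps:
- pwsh: |
    az pipelines runs show --id $env:BUILD_BUILDID --query "definition.id"
  env:
    # Secret values aren't exposed to scripts automatically; map the token explicitly.
    AZURE_DEVOPS_EXT_PAT: $(System.AccessToken)
  displayName: "Call az devops with a token from the environment"
```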

But it turns out to be much more complex. That's because az devops login will try to install Python's keyring package the first time it runs, and on the hosted runners the command has never been called before, which adds 10-20s to the first call.

Collaborated on a pull request to force keyring installation during image creation:

And filed an issue to make sure this package is included in az devops by default:

So you might think that setting AZURE_DEVOPS_EXT_PAT will be the fastest, but what happens under the hood might be surprising: az devops will first try to run az login and az devops login before attempting to use the environment variable, even if you explicitly pass it in. There should be a way to force the configuration used.

Filed an issue:

First run of az on the hosted runner always causes a rebuild of the command index

Similar to the case matters issue above, the first time az is invoked on the hosted runners a command index rebuild is triggered. Why? Because the command index doesn't exist in the user profile of the user that runs your workflow. These files are normally stored in your profile directory under ~/.azure and ~/.azure-devops, but since your workflow runs in a pristine user profile, these cached files are gone.

Submitted a pull request to move these folders to a persistent location:

This is being resolved by the following pull request:

First run of az on hosted runner causes python compilation

Because the image generation code never actually runs az and az devops, and because the ~/.azure and ~/.azure-devops directories aren't made available to the user that runs the workflow, the first run compiles several Python modules and caches the result.

This is also fixed by setting environment variables and calling az --help and az devops --help during image generation.
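If you bake your own agent image, the same idea could be sketched as a step like the one below. Treat it as an illustration under assumptions, not the code from the pull request: the folder C:\azure-cli-config is a hypothetical location, the step needs administrative rights to set a machine-level variable, and it only redirects the ~/.azure data through AZURE_CONFIG_DIR. It also assumes the azure-devops extension is already installed.

```yaml
steps:
- pwsh: |
    # Hypothetical persistent folder; pick any location that survives between runs.
    $configDir = 'C:\azure-cli-config'
    New-Item -ItemType Directory -Path $configDir -Force | Out-Null

    # Make the location visible to every user and to the current session.
    [Environment]::SetEnvironmentVariable('AZURE_CONFIG_DIR', $configDir, 'Machine')
    $env:AZURE_CONFIG_DIR = $configDir

    # Warm up: run az and az devops once so their caches are created up front.
    az --help | Out-Null
    az devops --help | Out-Null
  displayName: "Warm up az during image generation"
```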

Submitted a pull request to move these folders to a persistent location and warm-up az and az devops:

Some of the data that az devops needs isn't stored under ~/.azure, but has its own config path under ~/.azure-devops. While trying to redirect that folder and the underlying python-sdk/cache folder, I found out that the different ways of redirecting these folders don't all have the same effect, because the cache folder location is computed in different ways.

So, I filed another issue:

Default configuration of az

The default configuration of az leaves several settings enabled or unconfigured:

- Telemetry

- Disk logging

- Progress bars

- Console colors

- Survey prompts

- Auto-upgrade

There is a little-known az init command that asks whether you want to run in an interactive or an automation environment, and it turns a number of these settings off.

So, I filed a pull request to do the same thing on the Hosted Runner:

These changes are currently under consideration as they don't have the same impact as the other changes mentioned above (some could be breaking). You could set these in your own workflows if you'd like.
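As a rough sketch of what setting these yourself could look like, the fragment below uses pipeline variables, which Azure Pipelines exposes to every step as environment variables. The names follow Azure CLI's AZURE_{section}_{name} convention for configuration overrides; the chosen values are assumptions, so check them against your own needs. Auto-upgrade is left out because its section name contains a hyphen and doesn't map cleanly onto an environment variable name; az config set auto-upgrade.enable=no covers it at the cost of an extra az call.

```yaml
variables:
  AZURE_CORE_COLLECT_TELEMETRY: 'no'       # Telemetry
  AZURE_LOGGING_ENABLE_LOG_FILE: 'no'      # Disk logging
  AZURE_CORE_DISABLE_PROGRESS_BAR: 'true'  # Progress bars
  AZURE_CORE_NO_COLOR: 'true'              # Console colors
  AZURE_CORE_SURVEY_MESSAGE: 'no'          # Survey prompts

steps:
- pwsh: az version
  displayName: "Run az with automation-friendly settings"
```

Environment variables avoid an extra az invocation just to change the configuration, which matters when every call to az is expensive.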

And filed a pull request to turn off auto-upgrade in az init as well:

AzureCLI@2 does a version update check

When you use the AzureCLI@2 task, the first thing it does is run:

    az --version

This in turn performs an update check and caches the result. But as you can guess by now, that cached result isn't available in the pristine profile of the user running your workflow.

I feel that AzureCLI@2 has no business doing an update check anyway and it turns out that there is a second command az version (note the lack of --). It mostly does the same thing but skips the update check.

Filed another pull request:

And while it's nice that the alternative command exists, it would seem better to me to make the version-check configurable:

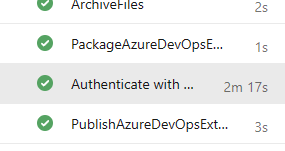

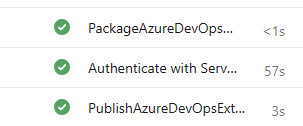

AzureCLI@2 task ignores pre-cached data by default on the Hosted Agent

The AzureCLI@2 task, by default, overrides the AZURE_CONFIG_DIR environment variable and redirects the folder containing the configuration to the agent's temp directory. By doing so the task explicitly ignores the pre-cached data on the agent.

You can prevent it from doing this by adding useGlobalConfig: true to the task:

    - task: AzureCLI@2
      inputs:
        scriptType: 'pscore'
        scriptLocation: 'inlineScript'
        useGlobalConfig: true # <- Saves 1 minute on hosted agent.

In my case the execution time drops from 2:17 to 0:57. Another 1:20 saved.

I've filed an issue with a proposed change to at least warn about the potential time waste or to not change the AZURE_CONFIG_DIR when running on the hosted agent by default:

Auto-detecting your Azure DevOps Organization and Project is slower

Many az devops commands need to know the location of your Azure DevOps organization and the name of the project you're connecting to. If you don't explicitly pass these in, az devops will try to run git remote --verbose to look up the details. This adds another little bit of overhead.

Since Azure Pipelines already knows the organization and the project and sets environment variables for you, you might as well pass them in explicitly:

    - pwsh: |
        az pipelines runs show --id $env:BUILD_BUILDID --query "definition.id" `
          --organization $env:SYSTEM_COLLECTIONURI `
          --project $env:SYSTEM_TEAMPROJECT

It would be nice if --detect would use these environment variables if they are available.

Issue filed:

On Azure Pipelines everything runs inside PowerShell 5.1

In Azure Pipelines everything except Node-based tasks runs inside PowerShell 5.1. Even a Windows Shell task (CmdLine@2) runs inside of PowerShell 5.1:

    Starting: CmdLine
    ==============================================================================
    Task : Command line
    Description : Run a command line script using Bash on Linux and macOS and cmd.exe on Windows
    Version : 2.212.0
    Author : Microsoft Corporation
    Help : https://docs.microsoft.com/azure/devops/pipelines/tasks/utility/command-line
    ==============================================================================
    ##[debug]VstsTaskSdk 0.9.0 commit 6c48b16164b9a1c9548776ad2062dad5cd543352
    ##[debug]Entering D:\a\_tasks\CmdLine_d9bafed4-0b18-4f58-968d-86655b4d2ce9\2.212.0\cmdline.ps1.
    ========================== Starting Command Output ===========================
    ##[debug]Entering Invoke-VstsTool.
    ##[debug] Arguments: '/D /E:ON /V:OFF /S /C "CALL "D:\a\_temp\5cf768ae-b47d-41b4-a176-02a9957e0e28.cmd""'
    ##[debug] FileName: 'C:\Windows\system32\cmd.exe'
    ##[debug] WorkingDirectory: 'D:\a\1\s'

Unlike GitHub Actions, even pwsh runs inside of PowerShell 5.1 in Azure Pipelines. This makes script: and pwsh: steps a tiny bit slower on Azure Pipelines than powershell: steps.

But under certain conditions (like the one below), this small slowdown can add a quite noticeable 6 to 10 seconds of delay.

I requested that a proper PowerShell Core execution handler be added to the Azure Pipelines Agent.

Windows might be trying to be helpful

While chatting with an engineer on the actions/runner-images team, we talked about a few issues that have been plaguing the hosted runner recently. It turns out that Windows Server has added a few more ways to ensure that Windows Update always runs and to run a few jobs to keep Windows performing at its best.

The hosted runner images are updated very frequently and when the image runs, it only needs to run one job before it's deleted. As such, these services are disabled during the generation of the Windows runner images.

Until recently that is.

A change in Windows has caused the "Storage Service" and the "Windows Update Service" to be re-enabled and restarted as soon as the image boots up. And if you're unlucky they slow down the hosted runner while doing their intended job.

A new image will be rolling out shortly, one that again turns off these services, this time a little more forcefully:

In the meantime, you can use this snippet in your workflow to turn these services back off:

Azure Pipelines:

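    # 🔁 Azure Pipelines
    steps:
    - pwsh: |
        # Services that get re-enabled and restarted when the image boots.
        $services = @("WaasMedicSvc", "StorSvc", "wuauserv")
        $services | %{
          # Reset the failure recovery actions so the service isn't restarted.
          $action = "''/30000/''/30000/''/30000"
          $output = sc.exe failure $_ actions=$action reset=4000
          stop-service $_ -force
          # WaasMedicSvc is skipped here; the other services are disabled outright.
          if ($_ -ne "WaasMedicSvc")
          {
            Set-Service -StartupType Disabled $_
          }
          # If the service is still running, kill its host process.
          if ((get-service $_).Status -ne "Stopped")
          {
            $id = Get-CimInstance -Class Win32_Service -Filter "Name LIKE '$_'" |
              Select-Object -ExpandProperty ProcessId
            $process = Get-Process -Id $id
            $process.Kill()
          }
        }
        # Report the final state of each service.
        $services | %{
          write-host $_ $((get-service $_).Status)
        }
    - checkout: self

GitHub Actions: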

    # ▶️ GitHub Actions
    steps:
    - run: |
        # Services that get re-enabled and restarted when the image boots.
        $services = @("WaasMedicSvc", "StorSvc", "wuauserv")
        $services | %{
          # Reset the failure recovery actions so the service isn't restarted.
          $action = "''/30000/''/30000/''/30000"
          $output = sc.exe failure $_ actions=$action reset=4000
          stop-service $_ -force
          # WaasMedicSvc is skipped here; the other services are disabled outright.
          if ($_ -ne "WaasMedicSvc")
          {
            Set-Service -StartupType Disabled $_
          }
          # If the service is still running, kill its host process.
          if ((get-service $_).Status -ne "Stopped")
          {
            $id = Get-CimInstance -Class Win32_Service -Filter "Name LIKE '$_'" |
              Select-Object -ExpandProperty ProcessId
            $process = Get-Process -Id $id
            $process.Kill()
          }
        }
        # Report the final state of each service.
        $services | %{
          write-host $_ $((get-service $_).Status)
        }
      shell: pwsh
    - uses: actions/checkout@v4

Some people might blame Microsoft in general for these issues, but of course this is an interaction caused by changes made by two teams with hugely different goals. Where the Windows team is trying to make Windows as secure and up to date as possible, the Runners team is trying to create an image of Windows that is as lean as it can be, up-to-date, secure, yet can run 100s of different tools ranging from .NET 4 to .NET 8 and from Python to Rust as well as Node and PHP. Strange interactions are almost impossible to prevent.

az devops caches instance level data

Whenever you connect to an Azure DevOps Organization, az devops will make 2 calls:

    OPTIONS /{organization}/_apis
    GET /{organization}/_apis/ResourceAreas

These calls return all the endpoints and their calling conventions, which are unlikely to change often. I've seen these calls add 10-30s to the first az devops command that makes an API call. So, I went looking for ways to cache the data.

I haven't found the ideal solution yet, but this is a working prototype that will make az devops reuse the data from a previous workflow run:

    # ⚠️ WARNING work in progress
    steps:
    - task: Cache@2
      inputs:
        key: '".az-devops"'
        path: 'C:\Users\VssAdministrator\.azure-devops'
      displayName: "Restore ~/.azure-devops"
    - pwsh: |
        (dir C:\Users\VssAdministrator\.azure-devops\python-sdk\cache\*.json) | %{$_.LastWriteTime = Get-Date}
      displayName: "Reset last modified on ~/.azure-devops/cache"

Unfortunately, it doesn't work under all circumstances yet, as the files may not always be there. And the pull request that changes the location of the .azure-devops folders will change the paths in the snippet above.

It might be worth creating a custom task that caches these files. Unfortunately, custom tasks don't seem to be able to access the cache in Azure Pipelines (or at least not through a mechanism in the agent or task-lib).

I've filed an issue to allow tasks to cache data:

Conclusion

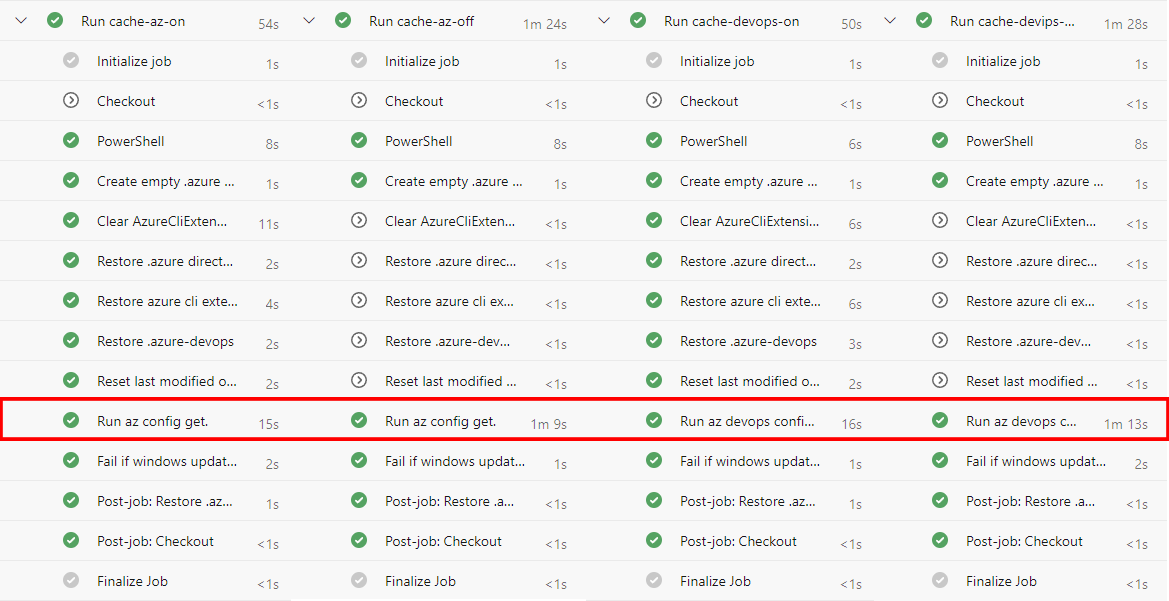

You can see results of all these changes below:

The combined set of changes reduces the time it takes to run az for the first time in a workflow by about 1 minute. Even with the added overhead of setting up the user profile the whole workflow is faster.

Some of these issues aren't exclusive to Windows either. The case sensitivity issue, keyring installation, Azure CLI's default settings and auto-detection of the Azure DevOps organization apply to Ubuntu and macOS runners too.

If you want to tinker a bit with these things yourself, here is an Azure Pipelines file that I used to test most of these scenarios.

The Hosted Runner is a complex thing. It's built for convenience and has 100s of tools preinstalled on it. Many of these tools have funny interactions with each other. And all of them run on an operating system that is also constantly changing.

Many of the tools we rely on to develop and deploy our code are flexible, extensible, and configurable and aren't always designed to run on a temporary server that executes the tool just once.

At the same time, az must run thousands, if not millions of times each day on the Windows Hosted runners and the issues above cause it to regularly run more than a minute longer than needed. I'm hoping that the work I've put in this week will not only speed up your workflows, but will save us all time and money. And - above all - it will hopefully reduce carbon emissions and water usage.

This investigation dug mostly into a single tool and its extensions. There may be an unknown number of similar issues lurking in the set of tools you rely on. Please do take the time to investigate and file issues, submit pull requests, and help improve these systems for all of us.

A huge shoutout to ilia-shipitsin for looking into these issues and testing them on actual hosted runners.

If these fixes are making your pipelines a little faster, consider sponsoring me to allow me to do these kinds of things more often: